Spotlights

[Research News] vmTracking enables highly accurate multi-animal pose tracking in crowded environments

Dr. Hirotsugu Azechi and Dr. Susumu Takahashi, Graduate School of Brain Sciences, develop Virtual Marker Tracking (vmTracking) to study the movement patterns of multiple animals in crowded spaces.

Understanding the movement patterns of animals is crucial for analyzing complex behaviors. However, accurately tracking the poses of individuals in crowded and densely populated environments remains a major challenge. Azechi and Takahashi have developed ‘Virtual Marker Tracking’ (vmTracking), which assigns virtual markers to animals, enabling consistent identification and posture tracking even in crowded environments. Their findings offer a simple, effective solution for tracking multiple animals in complex spaces for precise behavioral studies.

Reference

Azechi H, Takahashi S (2025) vmTracking enables highly accurate multi-animal pose tracking in crowded environments. PLoS Biol 23(2): e3003002.

https://doi.org/10.1371/journal.pbio.3003002

https://research.doshisha.ac.jp/news/news-detail-70/

This achievement has also been featured on EurekAlert!: https://www.eurekalert.org/news-releases/1074166

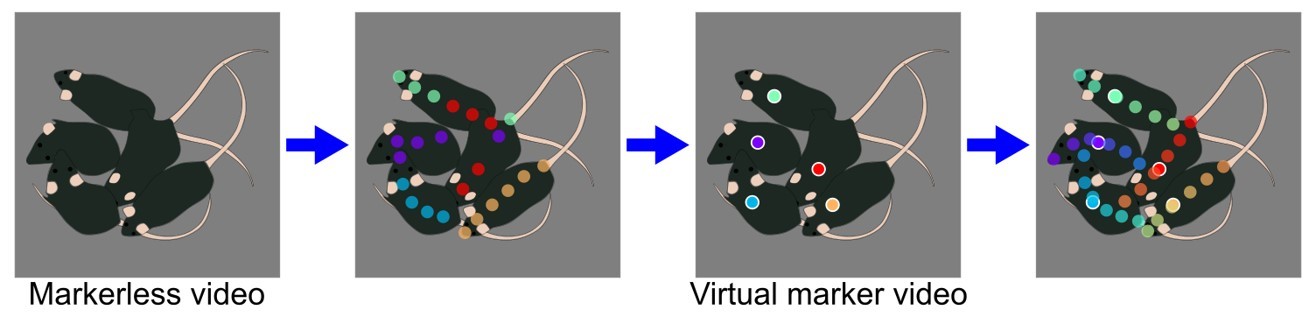

Title: vmTracking enables accurate identification in crowded environments

Caption: Conventional markerless tracking methods often misestimate or miss body parts in crowded spaces. In vmTracking, markerless multi-animal tracking is first performed on a video containing multiple individuals. The resulting output need not be fully accurate, because only some of the tracked points are extracted and used as virtual markers for identifying individuals. Applying single-animal DeepLabCut to the resulting virtual marker video then yields more accurate pose tracking than conventional methods.

Credit: Hirotsugu Azechi from Doshisha University, Japan

Image license: Original content

Usage restrictions: Cannot be used without permission
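The marker-extraction step described in the caption can be illustrated with a small sketch. It is not the authors' implementation: the data layout, the function names `assign_colors` and `select_virtual_markers`, the likelihood threshold, and the idea of giving each individual a fixed colour are all assumptions made for illustration. The sketch only selects which tracked points would become virtual markers in one frame. Drawing them onto the video and running single-animal DeepLabCut on the result are not shown.

```python
from typing import Dict, List, Tuple

# One tracked point: (x, y, likelihood). Assumed layout, not a real file format.
Keypoint = Tuple[float, float, float]
Color = Tuple[int, int, int]

# Hypothetical palette; any set of visually distinct colours would do.
PALETTE: List[Color] = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 0)]


def assign_colors(animal_ids: List[str]) -> Dict[str, Color]:
    """Give every individual a fixed colour so its markers stay recognisable."""
    return {aid: PALETTE[i % len(PALETTE)] for i, aid in enumerate(animal_ids)}


def select_virtual_markers(
    frame_tracks: Dict[str, Dict[str, Keypoint]],
    colors: Dict[str, Color],
    marker_parts: List[str],
    min_likelihood: float = 0.6,
) -> List[dict]:
    """Pick a subset of tracked points in one frame to serve as virtual markers.

    Points that are missing or below the likelihood threshold are skipped,
    reflecting the idea that the first-pass tracking need not be fully accurate.
    """
    markers = []
    for animal_id, parts in frame_tracks.items():
        for part in marker_parts:
            kp = parts.get(part)
            if kp is None:
                continue  # body part was not estimated in this frame
            x, y, likelihood = kp
            if likelihood < min_likelihood:
                continue  # too uncertain to use as a marker
            markers.append(
                {
                    "animal": animal_id,
                    "part": part,
                    "xy": (round(x), round(y)),  # pixel position to draw at
                    "color": colors[animal_id],
                }
            )
    return markers


# Example with made-up values for two animals in a single frame.
frame = {
    "mouse1": {"nose": (10.2, 20.7, 0.95), "tail": (30.0, 40.0, 0.2)},
    "mouse2": {"nose": (50.4, 60.4, 0.9)},
}
colors = assign_colors(["mouse1", "mouse2"])
markers = select_virtual_markers(frame, colors, ["nose", "tail"])
```

Here the low-confidence tail point of `mouse1` is dropped, so only the two nose points would be drawn as markers in this frame.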

Contact

Department of Research Planning

Telephone: +81-774-65-8256
